Architecture diagram

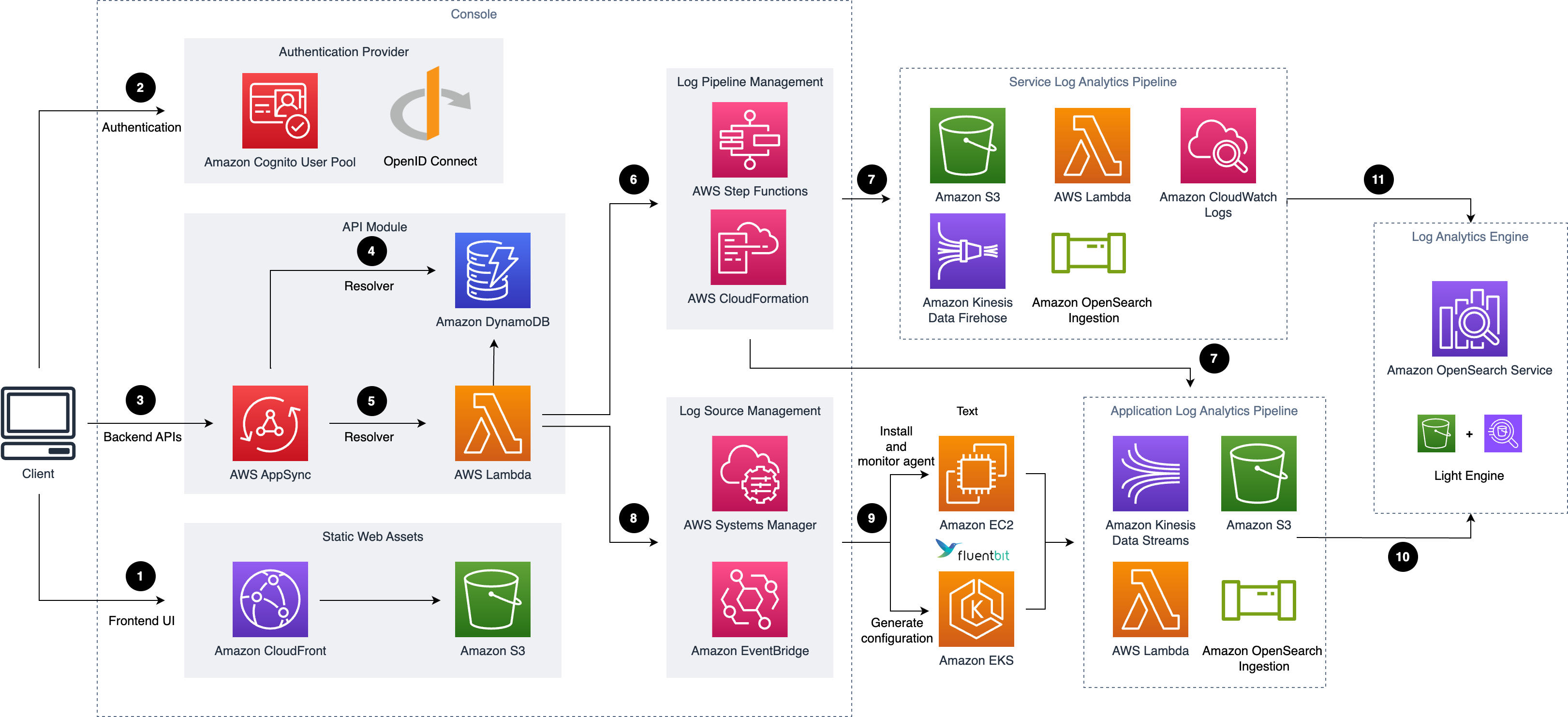

Deploying this solution with the default parameters builds the following environment in the AWS Cloud.

Centralized Logging with OpenSearch architecture

This solution deploys an AWS CloudFormation template in your AWS account and configures the following components.

- Amazon CloudFront distributes the frontend web UI assets hosted in an Amazon S3 bucket.

- An Amazon Cognito user pool or an OpenID Connect (OIDC) provider can be used for authentication.

- AWS AppSync provides the backend GraphQL APIs.

- Amazon DynamoDB serves as the backend database that stores solution-related information.

- AWS Lambda interacts with other AWS services to run the core logic for managing log pipelines and log agents, and obtains updated information from the DynamoDB tables.

- AWS Step Functions orchestrates the on-demand AWS CloudFormation deployment of a set of predefined stacks for log pipeline management. The log pipeline stacks deploy separate AWS resources that collect and process logs and ingest them into Amazon OpenSearch Service for further analysis and visualization.

- A Service Log Pipeline or an Application Log Pipeline is provisioned on demand through the Centralized Logging with OpenSearch console.

- AWS Systems Manager and Amazon EventBridge manage the log agents that collect logs from application servers. For example, they install log agents (Fluent Bit) on application servers and monitor the health status of the agents.

- Fluent Bit agents installed on Amazon EC2 instances or Amazon EKS clusters upload log data to the application log pipeline.

- Application log pipelines read, parse, and process application logs and ingest them into Amazon OpenSearch Service domains or Light Engine.

- Service log pipelines read, parse, and process AWS service logs and ingest them into Amazon OpenSearch Service domains or Light Engine.

After deploying the solution, you can use AWS WAF to protect CloudFront or AWS AppSync. You can also follow this guide to configure your AWS WAF settings to prevent GraphQL schema introspection.
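To show what introspection protection means in practice, the following Python sketch emulates locally what an AWS WAF string match condition on the request body does. Any request body that contains `__schema` is blocked. This is only an illustration and is not a WAF configuration. The blocked substring, the `waf_action` function, and the `listLogPipelines` query name are hypothetical.

```python
import json

# Assumption: the rule matches this substring, which is the root field that
# GraphQL schema introspection queries request.
BLOCKED_SUBSTRING = "__schema"


def waf_action(request_body: str) -> str:
    """Emulate a WAF body-inspection rule: "BLOCK" on a match, else "ALLOW"."""
    if BLOCKED_SUBSTRING in request_body:
        return "BLOCK"
    return "ALLOW"


if __name__ == "__main__":
    introspection = json.dumps({"query": "{ __schema { types { name } } }"})
    # listLogPipelines is a hypothetical query name used for illustration.
    regular = json.dumps({"query": "{ listLogPipelines { id } }"})
    print(waf_action(introspection))  # BLOCK
    print(waf_action(regular))  # ALLOW
```

A plain substring match keeps the sketch simple. Queries that use the `__type` introspection field would need a separate match if you also want to block them.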

This solution supports two types of log pipelines: Service Log Analytics Pipeline and Application Log Analytics Pipeline.

Service log analytics pipeline

Centralized Logging with OpenSearch supports log analysis for AWS services, such as Amazon S3 access logs and Application Load Balancer access logs. For a complete list of supported AWS services, refer to Supported AWS Services.

This solution ingests different AWS service logs using different workflows.

Note

Centralized Logging with OpenSearch supports cross-account log ingestion. If you want to ingest the logs from another AWS account, the resources in the Sources group in the architecture diagram will be in another account.

Logs through Amazon S3

This section applies to Amazon S3 access logs, CloudFront standard logs, CloudTrail logs (S3), Application Load Balancer access logs, AWS WAF logs, VPC Flow Logs (S3), AWS Config logs, Amazon RDS/Aurora logs, and AWS Lambda logs.

When OpenSearch is the log processor, the workflow supports two scenarios:

- Logs to Amazon S3 directly (OpenSearch as log processor)

In this scenario, the service sends logs directly to Amazon S3.

- Logs to Amazon S3 via Kinesis Data Firehose (OpenSearch as log processor)

In this scenario, the service cannot deliver its logs directly to Amazon S3. Instead, the logs are sent to Amazon CloudWatch Logs. Kinesis Data Firehose (KDF) subscribes to the CloudWatch log group and delivers the logs to Amazon S3.
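In the Kinesis Data Firehose scenario, each record that CloudWatch Logs sends through the subscription is a gzip-compressed JSON document with a `messageType` and a list of `logEvents`. The following Python sketch decodes one such record and returns its log messages. It assumes the record bytes have already been read. `decode_subscription_payload` and the sample log group name are hypothetical.

```python
import gzip
import json


def decode_subscription_payload(record: bytes) -> list[str]:
    """Return the log messages carried by one CloudWatch Logs subscription record.

    The record is gzip-compressed JSON. CONTROL_MESSAGE records only check
    that the destination is reachable, so they yield no messages.
    """
    payload = json.loads(gzip.decompress(record))
    if payload.get("messageType") != "DATA_MESSAGE":
        return []
    return [event["message"] for event in payload.get("logEvents", [])]


if __name__ == "__main__":
    sample = {
        "messageType": "DATA_MESSAGE",
        "logGroup": "/aws/lambda/example",  # hypothetical log group
        "logEvents": [{"id": "1", "timestamp": 0, "message": "hello"}],
    }
    print(decode_subscription_payload(gzip.compress(json.dumps(sample).encode())))
    # ['hello']
```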

The log pipeline runs the following workflow:

- AWS service logs are stored in an Amazon S3 bucket (Log Bucket).

- An event notification is sent to Amazon SQS using S3 Event Notifications when a new log file is created.

- Amazon SQS triggers the Log Processor Lambda function.

- The log processor reads and processes the log files.

- The log processor ingests the logs into Amazon OpenSearch Service.

- Logs that fail to be processed are exported to an Amazon S3 bucket (Backup Bucket).

For cross-account ingestion, the AWS services store logs in an Amazon S3 bucket in the member account, and the other resources remain in the central logging account.
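The following Python sketch shows how a log processor could extract the bucket and key of each new log file from an SQS-triggered Lambda event. It assumes that each SQS message body carries the standard S3 event notification JSON. `s3_objects_from_sqs_event` is a hypothetical helper and is not the solution's code.

```python
import json
from urllib.parse import unquote_plus


def s3_objects_from_sqs_event(event: dict) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs for newly created objects in an SQS-triggered Lambda event."""
    objects = []
    for message in event.get("Records", []):
        body = json.loads(message["body"])
        # The one-off s3:TestEvent sent when a notification is configured has
        # no "Records" key, so it contributes nothing.
        for record in body.get("Records", []):
            if not record.get("eventName", "").startswith("ObjectCreated:"):
                continue
            bucket = record["s3"]["bucket"]["name"]
            # Keys in S3 event notifications are URL-encoded (a space arrives as "+").
            key = unquote_plus(record["s3"]["object"]["key"])
            objects.append((bucket, key))
    return objects
```

Each returned pair identifies one log file for the processor to read. Skipping non-`ObjectCreated` events keeps deletions or other event types from being treated as new logs.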

- Logs to Amazon S3 directly (Light Engine as log processor)

In this scenario, the service sends logs directly to Amazon S3.

The log pipeline runs the following workflow:

- AWS service logs are stored in an Amazon S3 bucket (Log Bucket).

- An event notification is sent to Amazon SQS using S3 Event Notifications when a new log file is created.

- Amazon SQS triggers an AWS Lambda function.

- The Lambda function gets the objects from the Amazon S3 log bucket.

- The Lambda function puts the objects into the staging bucket.

- The Log Processor (AWS Step Functions) processes the raw log files stored in the staging bucket in batches.

- The Log Processor (AWS Step Functions) converts the log data into Apache Parquet format and automatically partitions all incoming data based on criteria such as time and Region.
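The following Python sketch illustrates time- and Region-based partitioning by grouping log rows under Hive-style partition paths. The key names `event_hour` and `region` and the hourly granularity are assumptions and are not Light Engine's actual schema. The Parquet conversion itself, which would need a library such as PyArrow, is omitted.

```python
from datetime import datetime, timezone


def partition_path(event_time: datetime, region: str) -> str:
    """Return a hypothetical Hive-style partition path for one log record."""
    if event_time.tzinfo is None:
        raise ValueError("event_time must be timezone-aware")
    # Normalize to UTC so records from different time zones share partitions.
    hour = event_time.astimezone(timezone.utc).strftime("%Y%m%d%H")
    return f"event_hour={hour}/region={region}/"


def group_by_partition(records):
    """Group (event_time, region, row) tuples by their partition path."""
    groups: dict[str, list] = {}
    for event_time, region, row in records:
        groups.setdefault(partition_path(event_time, region), []).append(row)
    return groups
```

Each group would then be written as one or more Parquet files under its path. A query engine can then skip partitions whose time or Region falls outside a query's filter.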

Logs through Amazon Kinesis Data Streams

This section applies to CloudFront real-time logs, CloudTrail logs (CloudWatch), and VPC Flow Logs (CloudWatch).

The workflow supports two scenarios:

- Logs to KDS directly

In this scenario, the service streams logs directly to Amazon Kinesis Data Streams (KDS).

- Logs to KDS via subscription

In this scenario, the service delivers logs to a CloudWatch log group. CloudWatch Logs then streams the logs in real time to KDS, which acts as the subscription destination.

Amazon KDS (via subscription) based service log pipeline architecture

The log pipeline runs the following workflow:

- AWS service logs are streamed to Kinesis Data Streams.

- KDS triggers the Log Processor Lambda function.

- The log processor processes the logs and ingests them into Amazon OpenSearch Service.

- Logs that fail to be processed are exported to an Amazon S3 bucket (Backup Bucket).

For cross-account ingestion, the AWS services store logs in an Amazon CloudWatch log group in the member account, and the other resources remain in the central logging account.

Warning

This solution does not support cross-account ingestion for CloudFront real-time logs.
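In the "Logs to KDS directly" scenario, the Lambda event contains base64-encoded Kinesis records. The following Python sketch decodes such records as CloudFront real-time log lines. These lines are tab-separated, with fields in the order chosen in the real-time log configuration. The `FIELDS` tuple is an assumed configuration, and `parse_realtime_records` is a hypothetical name. Lines whose field count does not match are collected separately, which mirrors the Backup Bucket step.

```python
import base64

# Assumption: the real-time log configuration selects these fields in this order.
FIELDS = ("timestamp", "c-ip", "sc-status", "cs-method", "cs-uri-stem")


def parse_realtime_records(event: dict, fields: tuple[str, ...] = FIELDS):
    """Decode Kinesis records from a Lambda event into dicts keyed by field name.

    Returns (rows, failed). Lines whose field count does not match `fields`
    are returned in `failed` instead of being parsed.
    """
    rows, failed = [], []
    for record in event.get("Records", []):
        line = base64.b64decode(record["kinesis"]["data"]).decode("utf-8").rstrip("\n")
        values = line.split("\t")
        if len(values) != len(fields):
            failed.append(line)
            continue
        rows.append(dict(zip(fields, values)))
    return rows, failed
```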

Application log analytics pipeline

Centralized Logging with OpenSearch supports log analysis for application logs, such as Nginx/Apache HTTP Server logs or custom application logs.

Note

Centralized Logging with OpenSearch supports cross-account log ingestion. If you ingest logs from the same account, the resources in the Sources group are in the same account where Centralized Logging with OpenSearch is deployed. Otherwise, they are in another AWS account.
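Application logs such as Nginx access logs must be parsed into structured records before they can be indexed. As an illustration, the following Python sketch parses lines in Nginx's predefined `combined` log format into dictionaries. It sets aside lines that do not match, much as failed logs go to the Backup Bucket. This is not the solution's parser, because Fluent Bit and the log processor handle parsing in the pipelines described here. `parse_nginx_lines` is a hypothetical name.

```python
import re

# Nginx's predefined "combined" access log format:
# $remote_addr - $remote_user [$time_local] "$request" $status
# $body_bytes_sent "$http_referer" "$http_user_agent"
COMBINED = re.compile(
    r'(?P<remote_addr>\S+) - (?P<remote_user>\S+) \[(?P<time_local>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<body_bytes_sent>\d+) '
    r'"(?P<http_referer>[^"]*)" "(?P<http_user_agent>[^"]*)"'
)


def parse_nginx_lines(lines):
    """Parse combined-format lines into dicts; return (docs, failed_lines)."""
    docs, failed = [], []
    for line in lines:
        match = COMBINED.fullmatch(line.rstrip("\n"))
        if match is None:
            failed.append(line)  # would be exported to the Backup Bucket
            continue
        doc = match.groupdict()
        # Store numeric fields as integers so they can be aggregated.
        doc["status"] = int(doc["status"])
        doc["body_bytes_sent"] = int(doc["body_bytes_sent"])
        docs.append(doc)
    return docs, failed
```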

Logs from Amazon EC2 / Amazon EKS

- Logs from Amazon EC2 / Amazon EKS (OpenSearch as log processor)

Application log pipeline architecture for EC2/EKS

The log pipeline runs the following workflow:

- Fluent Bit works as the underlying log agent that collects logs from application servers and either sends them to an optional Log Buffer or ingests them directly into the OpenSearch domain.

- The Log Buffer triggers the Log Processor Lambda function.

- The log processor reads and processes the log records and ingests the logs into the OpenSearch domain.

- Logs that fail to be processed are exported to an Amazon S3 bucket (Backup Bucket).

- Logs from Amazon EC2 / Amazon EKS (Light Engine as log processor)

Application log pipeline architecture for EC2/EKS

The log pipeline runs the following workflow:

- Fluent Bit works as the underlying log agent that collects logs from application servers and sends them to the Amazon S3 log bucket.

- An event notification is sent to Amazon SQS using S3 Event Notifications when a new log file is created.

- Amazon SQS triggers an AWS Lambda function.

- The Lambda function gets the objects from the Amazon S3 log bucket.

- The Lambda function puts the objects into the staging bucket.

- The Log Processor (AWS Step Functions) processes the raw log files stored in the staging bucket in batches.

- The Log Processor (AWS Step Functions) converts the log data into Apache Parquet format and automatically partitions all incoming data based on criteria such as time and Region.
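Processing staged files in batches means grouping many small objects into units of work. The following Python sketch shows one simple greedy way to do this, capping each batch by total size and file count. The `make_batches` name and both default limits are hypothetical and are not values the solution uses.

```python
def make_batches(objects, max_bytes=64 * 1024 * 1024, max_files=100):
    """Greedily split (key, size_in_bytes) pairs into batches of keys.

    A new batch starts when adding the next file would exceed max_bytes or
    the current batch already holds max_files files. A single file larger
    than max_bytes ends up in a batch of its own.
    """
    batches, current, current_bytes = [], [], 0
    for key, size in objects:
        if current and (current_bytes + size > max_bytes or len(current) >= max_files):
            batches.append(current)
            current, current_bytes = [], 0
        current.append(key)
        current_bytes += size
    if current:
        batches.append(current)
    return batches
```

Capping both size and count bounds the work of each batch. This keeps each processing step within predictable memory and time limits.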

Logs from Syslog Client

Important

- Make sure your Syslog generator/sender's subnet is connected to the two private subnets of Centralized Logging with OpenSearch. Use a VPC peering connection or AWS Transit Gateway to connect these VPCs.

- The NLB and the ECS containers shown in the architecture diagram are provisioned only when you create a Syslog ingestion. They are automatically deleted when no Syslog ingestion remains.

The log pipeline runs the following workflow:

- Syslog clients (such as Rsyslog) send logs to a Network Load Balancer (NLB) in the private subnets of Centralized Logging with OpenSearch. The NLB routes the logs to the Amazon ECS containers running Syslog servers.

- Fluent Bit works as the underlying log agent in the ECS service. It parses the logs and either sends them to an optional Log Buffer or ingests them directly into the OpenSearch domain.

- The Log Buffer triggers the Log Processor Lambda function.

- The log processor reads and processes the log records and ingests the logs into the OpenSearch domain.

- Logs that fail to be processed are exported to an Amazon S3 bucket (Backup Bucket).
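Every Syslog message starts with a `<PRI>` field that encodes both facility and severity as PRI = facility × 8 + severity (RFC 5424). In this solution, Fluent Bit performs the parsing. The following Python sketch only illustrates how that value decodes. The short facility labels are the conventional names used by many syslog implementations, and labels for codes 13 to 15 vary. `parse_pri` is a hypothetical name.

```python
import re

# Conventional short names for facility codes 0-23 (RFC 5424, section 6.2.1).
FACILITIES = [
    "kern", "user", "mail", "daemon", "auth", "syslog", "lpr", "news",
    "uucp", "cron", "authpriv", "ftp", "ntp", "audit", "alert", "clock",
    "local0", "local1", "local2", "local3", "local4", "local5", "local6", "local7",
]
# Severity codes 0-7, from most to least severe.
SEVERITIES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]

PRI = re.compile(r"<(\d{1,3})>")


def parse_pri(message: str) -> tuple[str, str, str]:
    """Return (facility, severity, rest_of_message) for one Syslog message."""
    match = PRI.match(message)
    if match is None:
        raise ValueError("message does not start with a <PRI> field")
    pri = int(match.group(1))
    if pri > 191:  # 23 * 8 + 7 is the largest valid value
        raise ValueError(f"PRI out of range: {pri}")
    facility, severity = divmod(pri, 8)
    return FACILITIES[facility], SEVERITIES[severity], message[match.end():]
```

For example, the RFC 5424 sample message starting with `<34>` decodes to facility 4 (`auth`) and severity 2 (`crit`), because 34 = 4 × 8 + 2.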